Machine learning methods can be applied to many remote sensing tasks. Counting objects and accurately assessing an area are two of them.

Nate Krause, the operations manager of Swans Trail Farms, invited us to fly over the farm's pumpkin patch in Snohomish (Washington, U.S.) to accurately quantify and categorize all the pumpkins.

In the past, AI/ML algorithms required data from a separate RGB camera. Now we are able to take high-resolution RGB composites and multispectral imagery at the same time, thanks to the panchromatic bands of our RedEdge-P or Altum-PT drone sensors.

For this mission, we selected the RedEdge-P multispectral and panchromatic sensor from the MicaSense series, flown on a DJI Matrice 300 drone with the Measure Ground Control mission planner. The 23-acre / 9-ha site was flown at 60 m / 197 ft AGL to achieve a GSD of 2 cm / 0.8 in per pixel in the pan-sharpened data.
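As a rough planning aid, GSD for a fixed sensor and lens scales linearly with flight height. The sketch below assumes that relationship and uses the 2 cm at 60 m figure from this flight as its reference point. The function name and its defaults are illustrative and do not come from any mission planner.

```python
def gsd_at_altitude(altitude_m, ref_gsd_cm=2.0, ref_altitude_m=60.0):
    """Estimate GSD (cm/pixel) at a given height above ground.

    Assumes GSD scales linearly with height for a fixed sensor and lens.
    The defaults are the pan-sharpened figures quoted for this flight.
    """
    if altitude_m <= 0:
        raise ValueError("altitude_m must be positive")
    return ref_gsd_cm * altitude_m / ref_altitude_m


for altitude in (40, 60, 120):
    print(f"{altitude} m AGL -> {gsd_at_altitude(altitude):.2f} cm/pixel")
```

Flying lower gives finer pixels, but covering the same site then generally takes more flight lines and more time.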

Processing and classifying the data

Once the flight was done, it was time to process the data and get the pumpkins counted and sized. For processing and pan-sharpening the data, we used Agisoft Metashape.

We then exported the five-layer multispectral GeoTIFF at 2 cm / 0.8 in per pixel and brought it into QGIS to get an overview of the pumpkin patch. The pumpkins were semi-automatically classified using simple multispectral thresholding techniques on a small subset of the orthomosaic.
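To give a feel for what multispectral thresholding looks like, here is a minimal NumPy sketch. It is not the rule set we used in QGIS: the band order (blue, green, red, red edge, NIR), the two indices, and both threshold values are assumptions chosen for illustration, and the reflectance values are made up.

```python
import numpy as np


def classify_pumpkin_pixels(bands, ndvi_max=0.3, red_blue_min=1.5):
    """Return a boolean mask of candidate pumpkin pixels.

    bands: float array of shape (5, H, W) holding reflectance in [0, 1],
    in the ASSUMED order blue, green, red, red edge, NIR.
    The thresholds are hypothetical and would need tuning on real data.
    """
    blue, _green, red, _red_edge, nir = bands
    eps = 1e-6  # avoids division by zero on dark pixels
    ndvi = (nir - red) / (nir + red + eps)   # high for green vegetation
    red_blue = red / (blue + eps)            # high for orange/red surfaces
    return (ndvi < ndvi_max) & (red_blue > red_blue_min)


# One row of three made-up pixels: pumpkin-like, vegetation-like, soil-like.
pixels = np.array([
    [[0.05, 0.03, 0.15]],  # blue
    [[0.20, 0.08, 0.18]],  # green
    [[0.50, 0.04, 0.20]],  # red
    [[0.50, 0.30, 0.22]],  # red edge
    [[0.60, 0.50, 0.25]],  # NIR
])
print(classify_pumpkin_pixels(pixels))
```

With these made-up values, only the first pixel passes both tests. The vegetation-like pixel fails the NDVI test and the soil-like pixel fails the red-to-blue ratio test.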

While this pixel-based approach was effective for general classification, it could not segment individual instances of pumpkins close to each other. To solve this problem, we manually edited any clumped detections and built a deep learning model with TensorFlow using the already-generated semi-automated detections as the input training data.
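The clumping problem is easy to reproduce on a toy mask. The sketch below counts connected regions in a binary classification mask with a plain flood fill. It is an illustration of the limitation, not part of our processing chain, and the two small grids are made up.

```python
from collections import deque


def count_connected_regions(mask):
    """Count 4-connected regions of truthy cells in a 2D list of lists."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    regions = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # New, unvisited region: flood-fill every cell connected to it.
            regions += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return regions


# Two pumpkins with a one-pixel gap between them.
separate = [
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
]
# The same two pumpkins, now touching.
touching = [
    [1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1],
]
print(count_connected_regions(separate))  # 2
print(count_connected_regions(touching))  # 1
```

Once the gap closes, the mask alone cannot tell that the blob holds two pumpkins, which is why an instance-level model was needed.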

The model was fed 2 cm / 0.8 in pan-sharpened RGB imagery from the RedEdge-P alongside our polygon detections. The high-resolution data was critical for the model to learn effectively and to distinguish between very close but separate pumpkins.

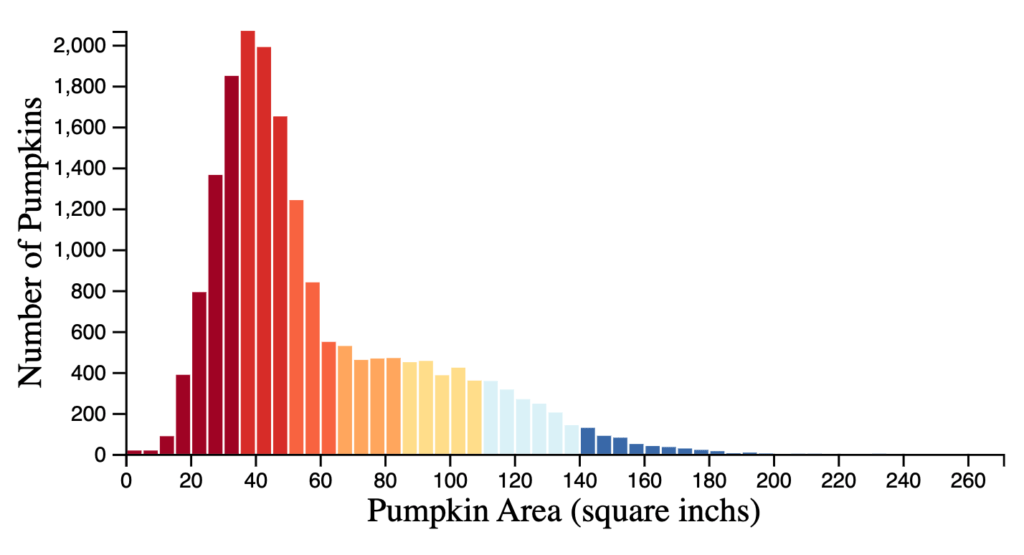

We were interested in generating accurate counts of pumpkins and getting an estimate of their size and the field area. This could help us predict potential profits or losses for the crop, compare results across years, and track counts and germination rates over time.
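Turning a detection into a size estimate is simple arithmetic once the GSD is known. The sketch below assumes each detection is available as a pixel count at 2 cm per pixel and reports the footprint area seen from above, plus the diameter of a circle with the same area. The pixel counts are hypothetical, not measurements from this field.

```python
import math


def pumpkin_size(pixel_count, gsd_m=0.02):
    """Return (footprint area in m^2, equivalent circle diameter in m).

    Each pixel covers gsd_m * gsd_m of ground, so area is a simple product.
    The diameter is that of a circle with the same footprint area.
    """
    area_m2 = pixel_count * gsd_m ** 2
    diameter_m = 2.0 * math.sqrt(area_m2 / math.pi)
    return area_m2, diameter_m


# Hypothetical pixel counts for three detected pumpkins.
pixel_counts = [180, 300, 420]
for n in pixel_counts:
    area, diameter = pumpkin_size(n)
    print(f"{n} px -> {area:.3f} m^2, about {diameter * 100:.0f} cm across")

print(f"count: {len(pixel_counts)}")
```

This measures the top-down footprint only, so a partly hidden or tilted pumpkin would be underestimated.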

The techniques used here are versatile and easily transferred to other sectors.

For example, we could apply the same analysis to forestry stand counts or to detecting early-emergent corn and other small plants of interest. We can detect features and output counts or statistics based on the reflectance of the detected features. This allows us to monitor and manage farms, forests, and our environmental resources more efficiently and effectively.